Digits at Google Next '23

Hannes Hapke


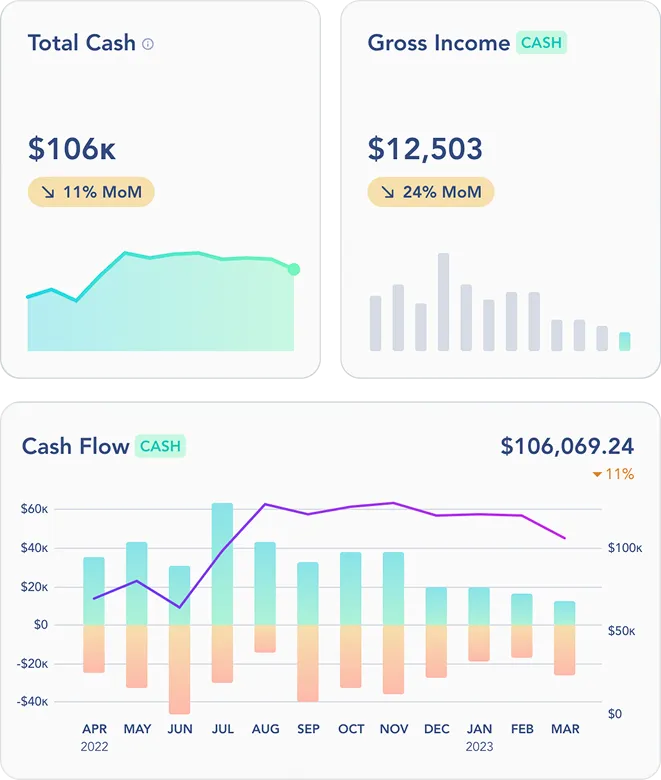

Every year, Google invites customers and major product partners to its Cloud conference, Google Next. After a multi-year hiatus from in-person events, Google Next returned in full force to San Francisco's Moscone Center, and Digits was invited to present how we collaborated with teams at Google to create Digits AI.

Because we use Vertex AI across many ML projects at Digits, presenting at Next was a unique opportunity to show how we have been pushing finance and accounting software forward, and to share our experience developing machine learning and AI with Google Cloud products.

🤖 Getting Early Access

In the weeks leading up to the conference, our engineering team received early, exclusive access to the latest release of Google Cloud's Vertex AI Python SDK. The new release lets you run machine learning model training or model analysis remotely, all controlled from a local Jupyter notebook. In the coming weeks, we'll share a more in-depth post with detailed explanations and feedback on our experience using the new product. For now, here is a summary of our initial findings, along with a video of our Google Next talk where we discussed our experiences.

Initial Learnings

Vertex AI has been fundamental to building lean machine learning projects at Digits. Below are some of our use cases, which we discussed in more detail during our Next talk:

- Vertex Pipelines → Any machine learning model in production is trained, evaluated and registered via CI-driven ML pipelines.

- Vertex Metadata Store → During model training, every pipeline artifact produced (e.g., the training set or the preprocessed training data) is archived in the metadata store.

- Vertex Model Registry → Every trained model that our machine learning pipelines produce and that passes evaluation is registered in a one-stop shop for future consumption.

- Vertex Online Prediction Endpoints → Data pipelines or backend APIs can access the machine learning models through batch processes or online prediction endpoints.

- Vertex Matching Engine (Streaming) → Generated embeddings are made available through Matching Engine, Vertex's embedding database service.
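The core gate in the list above (train, evaluate, archive the run, and register only positively evaluated models) can be sketched in a few lines of plain Python. This is a minimal, hypothetical illustration and not Vertex AI SDK code: `ModelRegistry`, `train`, `evaluate`, `run_pipeline`, the `min_accuracy` threshold, and the toy threshold classifier are all assumptions made for the example, standing in for the roles that Vertex Pipelines, the metadata store, and the model registry play.

```python
# Hypothetical, in-memory sketch of a train -> evaluate -> register gate.
# None of these names are Vertex AI SDK calls; they only mimic the roles of
# Vertex Pipelines, the metadata store, and the model registry.
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ModelRegistry:
    """Stand-in for a model registry: keeps versioned, approved models."""
    models: dict = field(default_factory=dict)

    def register(self, name, model, metrics):
        versions = self.models.setdefault(name, [])
        versions.append({"version": len(versions) + 1, "model": model, "metrics": metrics})
        return len(versions)


def train(examples):
    """Toy training step: threshold halfway between the two class means."""
    pos = [x for x, y in examples if y == 1]
    neg = [x for x, y in examples if y == 0]
    return {"threshold": (mean(pos) + mean(neg)) / 2}


def predict(model, x):
    return 1 if x >= model["threshold"] else 0


def evaluate(model, examples):
    correct = sum(predict(model, x) == y for x, y in examples)
    return {"accuracy": correct / len(examples)}


def run_pipeline(train_set, eval_set, registry, metadata_log, min_accuracy=0.9):
    """Train and evaluate a model; register it only if it passes the threshold."""
    model = train(train_set)
    metrics = evaluate(model, eval_set)
    # Archive the run's artifacts, loosely mirroring a metadata store.
    metadata_log.append({"train_set": list(train_set), "model": model, "metrics": metrics})
    if metrics["accuracy"] >= min_accuracy:
        return registry.register("toy-classifier", model, metrics), metrics
    return None, metrics


if __name__ == "__main__":
    registry, metadata_log = ModelRegistry(), []
    train_set = [(0.1, 0), (0.2, 0), (0.8, 1), (0.9, 1)]
    eval_set = [(0.15, 0), (0.3, 0), (0.7, 1), (0.85, 1)]
    print(*run_pipeline(train_set, eval_set, registry, metadata_log))  # → 1 {'accuracy': 1.0}
```

A run whose accuracy falls below `min_accuracy` is still archived in the log but never reaches the registry, so anything downstream that reads from the registry only ever sees models that passed evaluation.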

Presenting at Google Next highlighted the true value of sharing information and learning from others in the industry. The event gave us a platform to share our knowledge with other customers and offer insights into our work; in turn, we were privileged to learn from some of the industry's most respected AI/ML leaders as they shared their experiences and successes with Google products.

A special shout-out goes to Sara Robinson, Chris Cho, Melanie Ratchford, and Esther Kim for this tremendous opportunity. We are already looking forward to next year's event in Las Vegas.